Book review: The Little Black Book of Data and Democracy by Kyle Taylor

We swim in a sea of data. Our digital devices know where we’ve been, who we’ve communicated with, what we’ve looked at and how we’ve responded. As a result, each one of us is an open book. An open Facebook, indeed, or something similar. And we’ve allowed this to happen because we wanted to have fun toys and play with cool stuff for free.

People have been warning us about the risks of giving so much away. And politicians have promised to protect us from the consequences of our idle generosity. But it’s probably far too late. The problem is not just a personal one: it’s threatening the very fabric of our democracy.

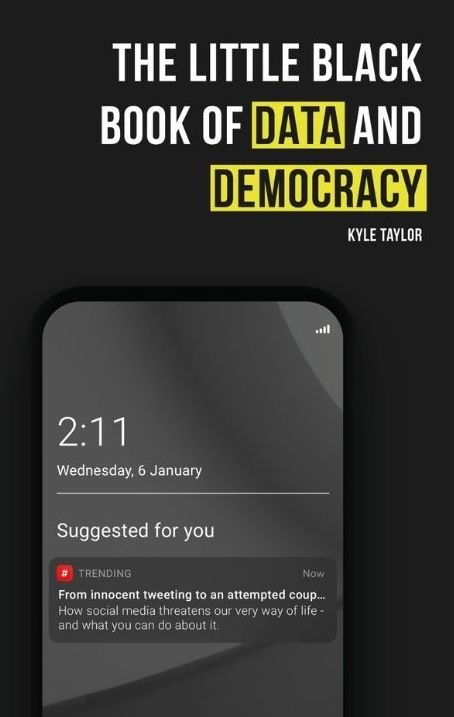

In his short, clear, persuasive book, Kyle Taylor sets out to demystify the complex relationship between personal data, big tech and political power, and to help us “understand how our very way of life is under threat and what you can do about it before it’s too late”.

It’s both a useful primer and an urgent reminder.

We are the product

The author comes from a political campaigning background, where he once saw personal data as a way to understand voters better and so communicate with them more effectively about the issues. Now, after closely analysing the Cambridge Analytica scandal and what went wrong in the 2016 US Presidential Election and the UK Brexit campaign, he feels very differently:

“Access to more information – made possible by technology and so-called ‘social’ media – has, in less than a decade, shifted the planet from a world existing in one shared reality to one that can’t even agree on the most basic truths. Feelings are conflated with facts. Facts can now be ‘alternative’. Whatever you ‘like’ is true. Whatever you don’t like is ‘fake news’. The technologies that were meant to bring the world together have instead driven us deep into echo chambers…”

He begins by explaining how social media works: it appears to offer users a chance to talk about themselves and engage with others, but then steers them towards more extreme positions and more emotionally triggering content. This in turn holds their attention for longer while the platform collects yet more data, exploiting both it and them for the purpose of ever more highly targeted advertising.

And of course we think it’s all free but it isn’t. We’re not paying for the product because we are the product. We are the thing that’s being sold. And we’re addicted. So the question is, to whom are we being sold? Is it just commercial advertisers, who want to sell us cool stuff that we might have wanted anyway? Or is it a political faction or foreign power whose “stuff” we probably wouldn’t want?

Cookie monsters

Taylor explains why cookies are not so delicious after all, and how the choice as to whether to accept them is often largely illusory. And here’s the thing: the cookie that’s supposed to remember your choice of which cookies you don’t want to accept is the one that almost never remembers, so every time you visit the same site you need to go through the whole process again.

Even when you do something as simple as searching on Google, the data Google already has about you helps them to prioritise and rank the results they show you. And they know more about you because they also own YouTube, just as Facebook collects more information, even if you aren’t on Facebook, by also owning WhatsApp. As for Amazon, as well as knowing a lot about your shopping preferences just from watching you shop, with interactive devices like Alexa they can listen to your every conversation and monitor all the devices in your home. How cool is that? (Not in my home, by the way, but maybe my Firestick or smartphone is doing the same thing anyway?) How cool is a smart fridge that knows when you’re running out of milk and can order some more online? How cool is a doorbell that tracks who’s walking past your house? How cool is it to talk about your most intimate relationship in private – only to find birth control products being advertised next time you open a website?

Your face is their fortune

Then there are things like FaceApp, a Russian-developed phone app that uses artificial intelligence (AI) to playfully recreate your face, making you look weirdly older or younger. What a giggle: it sounds like harmless fun, and hey – it’s free! But all the while it’s collecting facial recognition data, as are Face ID and all the photo apps that enable tagging. At the other end of this wedge is the Chinese state’s ability to identify individuals who transgress minor laws, by jaywalking or littering in the street, and mark down their social credit accordingly. Not quite so funny.

Taylor reports that facial recognition algorithms can detect not just who we are, but how we are feeling: happy, sad, angry or fearful. In a questionable reincarnation of the 19th-century pseudoscience of phrenology (which claimed to detect criminality from the shape of people’s heads), AI can now even detect a person’s sexuality just by scanning photos of their face. What if it could detect criminal intent or even guilt? The implications for civil liberties and accountable justice are very concerning.

The public sphere

All this is scary enough, but what is the effect on the “public sphere” – the shared common assumptions on which we make political decisions and the shared space in which we discuss them? This is now being polluted by the misinformation spread by social media and polarised by its promotion of conflict and exaggeration. It is also easy prey for deliberate campaigns of disinformation, which spread like a virus from person to person, often promoted by “bots” (i.e. fake, automated social media accounts). Taylor says “it is estimated that bots are responsible for 70% of Covid-19 activity on Twitter”. Combined with the tendency of social media to fuel your addiction by continually validating your feelings, these campaigns create the impression of an alternative reality, which many of us are sadly happy to opt into.

Having diagnosed the many and increasing problems with data abuse, Taylor concludes with an admittedly rather slender section on “What You Can Do About It”, which includes changing settings on your smart devices and browsers, as well as more activist steps, like reporting bots and campaigning for better regulation. I felt this section could have been more comprehensive, but ultimately the book is about raising people’s awareness of the problems rather than trying to solve them all. Since one of those problems is people’s complacency, it is probably a good thing just to give everyone a bit of a fright.

The Little Black Book of Data and Democracy by Kyle Taylor (Byline Books, £9.99; ebook available on Amazon, Google Books, etc.)

Featured image via Shutterstock.